Adapting test strategies to IoT

But this shift raises a number of issues for effective validation and verification. A key difference between devices designed for the IoT and classic embedded systems lies in the explosion of their possible use cases. Traditionally, embedded systems were often designed to run in a standalone context or, if they were networked, in a small set of predefined contexts. Such designs could be supported by a relatively straightforward strategy of testing at the unit level, followed by testing the integration of those units and finally testing at the subsystem and system levels.

The IoT redefines the concept of ‘system’. It brings with it the possibility of building much larger-scale, emergent systems in which interactions between independently designed devices on the network deliver the system functions. Incorrect functionality within one device can result in unpredictable behaviour at the system level, or may have little to no impact because other devices in the system can respond appropriately.

A number of IoT applications rely on the concept of sensor fusion in which readings from many different sources are combined to build what should be a more accurate picture of what is happening around them. Errant devices may inject noise or incorrect data into the network that causes other devices to respond inappropriately, resulting in the wrong outputs being generated.
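One common way to limit the damage an errant device can do to a fused result is to reject readings that sit far from the consensus before averaging. The following is a minimal sketch of that idea using the median absolute deviation (MAD). The function name `fuse_readings` and the `max_deviation` and `min_spread` parameters are illustrative assumptions, not part of any particular IoT framework.

```python
from statistics import median


def fuse_readings(readings, max_deviation=3.0, min_spread=0.1):
    """Average the readings that agree with the consensus.

    Hypothetical parameters (assumptions for this sketch):
      max_deviation: tolerance in multiples of the spread (should be >= 1)
      min_spread:    floor on the spread, so that sensors which agree
                     almost exactly do not make the filter over-strict
    Returns (fused_value, number_of_rejected_readings).
    """
    if not readings:
        raise ValueError("no readings to fuse")

    centre = median(readings)
    # Median absolute deviation: a spread estimate that a minority of
    # wild readings cannot inflate.
    mad = median(abs(r - centre) for r in readings)
    tolerance = max_deviation * max(mad, min_spread)

    trusted = [r for r in readings if abs(r - centre) <= tolerance]
    return sum(trusted) / len(trusted), len(readings) - len(trusted)


# Four temperature sensors agree; a fifth is reporting nonsense.
value, rejected = fuse_readings([21.0, 21.2, 20.9, 21.1, 85.0])
print(f"fused value {value:.2f}, rejected {rejected} reading(s)")
```

A filter like this only helps while errant devices are in the minority. If most sources are wrong, the consensus itself is wrong.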

Because IoT devices will be designed independently it is almost impossible for the device design team to test for specific problems caused by other systems. Even if some of those systems are available for test before product delivery, a fundamental tenet of the IoT is that new applications will be developed over time that may stress the device in unforeseen ways.

The problems of testing for the IoT raise issues of responsibility. Who is at fault if a single device starts emitting erroneous data that causes a gateway device to report non-existent events that destabilise the control loops used by other components of the system, causing a failure that leads to an accident?

Is it the vendor of the faulty device, the developer of the gateway for failing to filter the bad data, or the developers of the controllers for being unable to cope with extraneous events? A large number of IoT systems will also need to be able to cope with user-written software. Those used in home automation, for example, may be controlled by a combination of downloadable apps and user-written or customised scripts. Errors in these could, if not guarded against, cause IoT nodes to behave unpredictably.

Developers of IoT devices need to consider not only the stability of their design when used in a networked context but also its vulnerability. When things become financially important, they become enticing targets for hackers.

Even where there is no direct financial benefit for a successful attacker, some devices may provide an avenue for hackers to gain personal data that can be used for phishing attempts, or may simply be attractive targets for digital vandalism. In the wake of security disclosures about an internet-enabled thermostat, which showed how it was possible to load a web page revealing the password it required, some users reported their devices misbehaving. In one case, a home user woke up in the early morning to find theirs had been set to 35°C.

In the world of personal computers and servers, the idea of regularly patching the software to counter types of attacks as they become known has become entrenched. But devices that do not have any form of high-bandwidth connection to the internet or which cannot suffer the downtime associated with a firmware update and reboot cannot realistically be treated the same way.

An IoT device may have no high-bandwidth connection to load new software other than a custom connector inside the package that was used during factory configuration, and after commissioning in the field may be installed out of easy reach.

Because many of the devices will be practically inaccessible, the “patch and pray” strategy used for many desktop software packages is unlikely to be effective for many forms of IoT device. They will need to be shown to be secure against a wide range of attacks. Patching can only be used for extreme situations where certain types of hack were unforeseeable at the time of design.

Because of the factors outlined above, there is a strong requirement for the firmware inside IoT devices to demonstrate trustworthiness. This has led, in the UK, to the release of a standard designed to improve the ability of software to avoid failures and resist attacks. The Trustworthy Software Initiative has backed the British Standards Institution’s PAS 754:2014 standard, which identifies five aspects of software trustworthiness: safety, reliability, availability, resilience and security.

The BSI document describes a widely applicable approach to achieving software trustworthiness rather than mandating any specific practices or procedures. The standard calls for an appropriate set of governance and management measures to be set up before producing or using any software which has a trustworthiness requirement.

Under the regime, design teams need to perform risk assessments that consider the set of assets to be protected, the nature of the adversities that may be faced and the way in which the software may be susceptible to such adversities. To manage that risk, appropriate personnel, physical, procedural and technical controls need to be deployed. Finally, PAS 754 demands that a regime be set up so that creators and users of software can confirm that governance, risk and control decisions have been implemented.

Where devices are likely to be incorporated into systems that have a safety aspect, certification to one of the relevant standards will be needed. This may be a generic standard such as IEC 61508 or a domain-specific standard derived from it, such as ISO 26262, which has been embraced by many of the automotive OEMs.

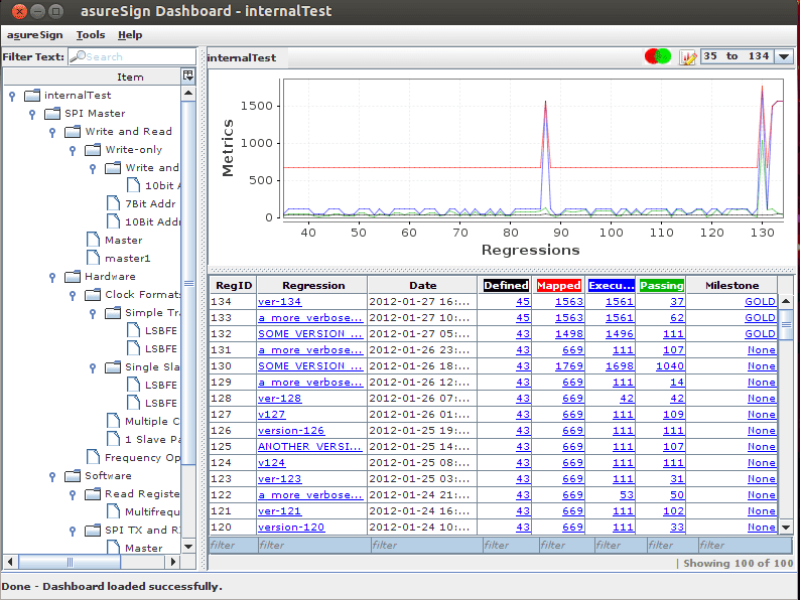

A key element of the safety standards is the appropriate selection of a safety integrity level (SIL), which guides the degree to which functionality needs to be tested during design, during production and in the field. Testing in the field matters because built-in self-test mechanisms may be needed to ensure the device remains fit for purpose. For example, a sensor node may include software to check that its inputs are within bounds and do not indicate a failure caused by too much dirt or a lost connection.
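A built-in self-test of the kind described for a sensor node might look something like the sketch below. It classifies a short window of raw samples as healthy, out of bounds, suspiciously flat or missing. The status names, the `min_variation` threshold and the use of `None` to represent a sample that never arrived are all assumptions made for illustration. A real node would take its limits from the sensor's datasheet and the safety analysis.

```python
from enum import Enum


class SensorStatus(Enum):
    OK = "ok"
    OUT_OF_RANGE = "out_of_range"
    STUCK = "stuck"                # e.g. an obscured or dirty sensor
    DISCONNECTED = "disconnected"  # e.g. a lost connection


def self_test(samples, low, high, min_variation=0.01):
    """Classify a window of raw samples from one sensor.

    Assumptions for this sketch: a missing sample is represented by
    None, and a healthy sensor always shows at least `min_variation`
    of noise across the window.
    """
    if not samples or any(s is None for s in samples):
        return SensorStatus.DISCONNECTED
    if any(s < low or s > high for s in samples):
        return SensorStatus.OUT_OF_RANGE
    if len(samples) > 1 and max(samples) - min(samples) < min_variation:
        return SensorStatus.STUCK
    return SensorStatus.OK


# A temperature sensor rated for -40 to 85 degrees C.
print(self_test([20.1, 20.4, 20.2], low=-40.0, high=85.0))
print(self_test([20.1, None, 20.2], low=-40.0, high=85.0))
```

Reporting a status alongside each reading lets downstream devices decide for themselves whether to trust the data.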

SILs differ slightly according to the relevant standard but follow a similar structure. In ISO 26262, for example, the automotive SIL (ASIL) ratings run from A to D, with QM denoting no safety impact. ASIL A applies to systems that have little safety impact, and the ratings rise to D for the most critical functions, such as brakes and steering. In IEC 61508, the SILs are numbered but cover a similar grading of criticality.

The SIL determines the level to which each system needs to be tested. But to ensure that external problems cannot upset the more safety-critical systems, the engineering team has to go through a process of determining what could possibly affect the system they are designing, including events from external equipment.

This calls for functions that check inputs as well as outputs for problems so that errant data does not cause the system to react unpredictably. An overarching test strategy needs to support those higher-level tests as well as the unit tests that will usually be performed during function creation and integration.
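As a rough illustration of checking both inputs and outputs, the sketch below wraps a control function in a guard. Implausible inputs are rejected in favour of the last good value, and whatever the control function returns is clamped to a permitted range. The class name `GuardedController`, its parameters and the simple heater example are hypothetical. A production design would also need a timeout or recovery path so that a genuine step change is not rejected indefinitely.

```python
class GuardedController:
    """Wrap a control function with input and output checks (a sketch)."""

    def __init__(self, control_fn, input_range, max_step, output_range):
        self.control_fn = control_fn
        self.input_range = input_range    # (low, high) plausible inputs
        self.max_step = max_step          # largest credible change per update
        self.output_range = output_range  # (low, high) permitted outputs
        self._last_good = None
        self.rejected = 0                 # count of inputs refused so far

    def update(self, reading):
        low, high = self.input_range
        in_range = low <= reading <= high
        credible_step = (
            self._last_good is None
            or abs(reading - self._last_good) <= self.max_step
        )
        if in_range and credible_step:
            self._last_good = reading
        else:
            self.rejected += 1
            if self._last_good is None:
                # No trustworthy input yet: the caller should hold
                # its safe default rather than act on bad data.
                return None

        # Output check: never pass on a demand outside the allowed range.
        raw = self.control_fn(self._last_good)
        out_low, out_high = self.output_range
        return min(max(raw, out_low), out_high)


# Hypothetical heater: demand (0-100 %) proportional to the shortfall
# below a 21 degree C setpoint.
heater = GuardedController(
    control_fn=lambda temp: 10.0 * (21.0 - temp),
    input_range=(-40.0, 85.0),
    max_step=5.0,
    output_range=(0.0, 100.0),
)
print(heater.update(19.0))    # plausible reading
print(heater.update(-273.0))  # errant reading, last good value is reused
print(heater.rejected)
```

Keeping a count of rejected inputs gives higher-level tests something to assert against when errant data is injected deliberately.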

Although the additional level of testing required for IoT may seem to be a burden that is difficult to support, research into software costs has shown that this attention to detail can pay off commercially. In a seminal paper published in IEEE Computer in 2001, Barry Boehm and Victor Basili of the University of Southern California and the University of Maryland, respectively, found that it costs 50 per cent more per source instruction to develop high-dependability software products than to develop low-dependability software products.

But, using the COCOMO II maintenance model, they found that low-dependability software costs about 50 per cent more per instruction to maintain than to develop. High-dependability software, on the other hand, costs 15 per cent less to maintain than to develop. Making IoT systems more resistant to problematic external code and events, and thus avoiding inconvenient reflashing of the device, is likely to lead to much lower maintenance costs than for systems where those precautions have not been taken.
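To put the quoted percentages side by side, the short calculation below normalises the cost of developing low-dependability software to one unit per source instruction. The baseline of 1.0 is an arbitrary assumption, and the sketch covers only the ratios quoted above. It does not attempt to model field costs such as retrieving and reflashing an inaccessible device.

```python
# Relative cost per source instruction. The baseline (developing
# low-dependability software = 1.0) is an arbitrary normalisation.
LOW_DEVELOP = 1.0
HIGH_DEVELOP = LOW_DEVELOP * 1.50    # 50 per cent more to develop
LOW_MAINTAIN = LOW_DEVELOP * 1.50    # 50 per cent more to maintain than develop
HIGH_MAINTAIN = HIGH_DEVELOP * 0.85  # 15 per cent less to maintain than develop


def maintenance_saving():
    """Fraction by which high-dependability maintenance undercuts low."""
    return 1.0 - HIGH_MAINTAIN / LOW_MAINTAIN


print(f"low-dependability maintenance:  {LOW_MAINTAIN:.3f}")
print(f"high-dependability maintenance: {HIGH_MAINTAIN:.3f}")
print(f"relative saving: {maintenance_saving():.0%}")
```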

The IoT will do much to increase the level of automated intelligence around us. It will also, because of this, change the way embedded systems developers handle validation and verification.

About the authors:

Mike Bartley is Founder and CEO of Test and Verification Solutions Ltd (TVS) – www.testandverification.com

Declan O’Riordan is Head of Security Testing at TVS.

