SoC silicon – Powerful building block to enable soft networks

The mobile Internet and the proliferation of smart devices have significantly changed the way people use networks. Smart devices have enabled people to work and interact with each other anytime, anywhere. Mobile Internet consumer behavior is characterized by a thirst for new content, particularly location aware content, social network interaction, image sharing, posting and streaming video.

Parallel to the well-documented consumer bandwidth explosion is an emerging trend called “the internet of things,” in which machine-to-machine (M2M) communication is used to monitor, control and automate everything from industrial controls to security monitoring. Most of this M2M traffic is low volume (with the exception of video traffic), but the sheer number of devices that the network will need to track will affect overall capacity. Together, these trends are reshaping the landscape of the telecom network infrastructure and the application servers that provide content and machine-to-machine functionality.

The rapid increase in network demands is putting a heavy burden on today’s network infrastructure, which requires significant upgrades and additional build out just to maintain today’s user experience. What is needed to solve this problem is a more powerful networking building block that enables a softer, more dynamic network. The following is a look at the fundamental enabling technologies that will deliver the computing power, connectivity, bandwidth, performance and economics for tomorrow’s soft network infrastructure.

The trend of the evolving network

The Internet has fundamentally reshaped today’s network infrastructure. There are no longer separate voice and data networks, and Internet protocol (IP) is the dominant protocol for all interaction. The telecom core network continues to converge with the mobile and fixed line network. Wireless networks are moving from a macro-dominated structure to a heterogeneous network (HetNet) composed of a variety of cell sizes to improve coverage and capacity. Cloud based techniques are driving a renaissance in application design and data center construction. Cloud techniques are even being considered for the radio access network itself (cloud RAN, or CRAN) as an alternative to field based equipment. The fixed line portion of the network is increasingly fiber optic based, and data center connectivity is Ethernet dominated and moving towards 40/100Gbps to provide the capacity needed for present and future applications. A closer look at the new ways networks are being used reveals significant changes in several areas.

First, the transport of video is now commonplace and the traffic is not limited to YouTube streams. Video chat and live event streaming are becoming more common. As a result, real-time video is present on the upstream in much higher volumes than ever before. The traditional asymmetrical network, with much higher downlink data throughput than uplink, can throttle the uplink data traffic and degrade real-time video performance. Users are consuming video (and everything else) on a variety of devices with varying screen resolutions and processing capabilities. This creates a need for network or data center based transrating and transcoding of the video content to manage network bandwidth and to give users a smooth streaming experience.
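The selection step behind transrating can be sketched as follows. This is a minimal illustration, not a description of any particular product: the `Rendition` type, the `LADDER` values and the `pick_rendition` function are all hypothetical names and numbers chosen for the example. The idea is simply to pick the highest bit rate version of a stream that fits both the screen of the device and the bandwidth currently available to it.

```python
from typing import NamedTuple, Sequence


class Rendition(NamedTuple):
    height: int  # vertical resolution in pixels
    kbps: int    # encoded bit rate in kilobits per second


# Hypothetical set of encodings of the same stream (illustrative numbers only).
LADDER = (
    Rendition(1080, 5000),
    Rendition(720, 2500),
    Rendition(480, 1000),
    Rendition(240, 400),
)


def pick_rendition(ladder: Sequence[Rendition],
                   screen_height: int,
                   available_kbps: int) -> Rendition:
    """Return the highest bit rate rendition that fits the screen and the link.

    If nothing fits, fall back to the lowest bit rate rendition so the
    stream can still start.
    """
    candidates = [r for r in ladder
                  if r.height <= screen_height and r.kbps <= available_kbps]
    if candidates:
        return max(candidates, key=lambda r: r.kbps)
    return min(ladder, key=lambda r: r.kbps)


if __name__ == "__main__":
    print(pick_rendition(LADDER, screen_height=720, available_kbps=3000))
    print(pick_rendition(LADDER, screen_height=1080, available_kbps=800))
```

In a real deployment the renditions would be produced by transcoding hardware in the network or data center; the sketch only shows the decision that such hardware makes worthwhile.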

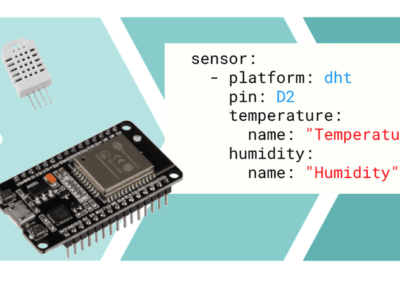

Second, M2M connectivity coupled with wireless sensors is creating the “internet of things,” which generates real-time data and allows remote control and processing of information for various devices through the Internet. Instead of being predominantly downlink heavy, these machine applications typically produce information that is sent upstream or to peers, putting additional pressure on the networks. Traditionally, these supervisory control and data acquisition (SCADA) networks were stand-alone, private and therefore expensive. Leveraging the Internet for connectivity brings the cost down and opens up many new applications for real-time sensing, monitoring and control. M2M applications will have many more devices and many more transactions. Even though the data packet payload could be very small, the cumulative effect will be large and, in the case of video monitoring, the volume of traffic will be large. One alternative is to use smart endpoints and sensors that are able to preprocess the data they are collecting and to send only exceptions. This is particularly effective in video based systems that use video analytics. Near Field Communication (NFC) is another driver that will spawn millions, if not billions, of M2M network attachments as retail terminals, vending machines and kiosks adopt NFC as their preferred payment system.
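The "send only exceptions" approach can be illustrated with a short sketch. It assumes a hypothetical endpoint that samples a value over time and forwards a reading upstream only when it falls outside a configured normal range; the function name `exception_reports`, the thresholds and the sample data are all invented for the example.

```python
from typing import Iterable, Iterator, Tuple

Reading = Tuple[int, float]  # (timestamp, measured value)


def exception_reports(readings: Iterable[Reading],
                      low: float,
                      high: float) -> Iterator[Reading]:
    """Yield only the readings that fall outside the range [low, high].

    Readings inside the range are dropped at the endpoint and never
    reach the network.
    """
    for timestamp, value in readings:
        if value < low or value > high:
            yield timestamp, value


# Hypothetical temperature samples; only two of the five are abnormal.
samples = [(0, 21.0), (1, 21.4), (2, 35.2), (3, 21.1), (4, 4.9)]
alerts = list(exception_reports(samples, low=10.0, high=30.0))

if __name__ == "__main__":
    print(f"sent {len(alerts)} of {len(samples)} readings upstream: {alerts}")
```

With these sample values, two of five readings are transmitted. The same pattern applies to video analytics, where the endpoint would forward an event or a short clip rather than a continuous stream.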

The third factor is the emergence of cloud based applications and cloud computing. The challenge of cloud computing is largely how to scale the data center without increasing electricity consumption to the point of killing the economics of the business model. Cloud based data centers need massively parallel high-performance processors for application processing and high capacity packet processing. Today, these data centers use standard servers for their compute platform, but as more and more users rely on cloud services, data centers need to be able to scale. There is an emerging trend towards using specialized processors to assist scaling by delivering processing performance at a lower cost and power consumption than general-purpose processors. Often the introduction of specialized processors necessitates a partitioning of the application, which drives a requirement for more and better intra-server communication. For this reason, it makes sense to integrate specialized application processing with packet processing and high capacity network switching wherever possible.

The impact on network architecture

Traditionally, the Internet has been built for the web-browsing model of small upstream requests and a downstream burst. New traffic patterns are emerging to leverage private and public cloud based applications. Data centers are being redesigned to handle global scale and to apply analytics to the “big data” they are privy to. And as mentioned earlier, millions of smart devices are connecting via the Internet, forming M2M networks often coupled with wireless sensors. As the network evolves and the variety of devices and payloads expands, the traditional Internet (built from switches and routers assuming a client-server networking model) will be challenged. Internet traffic that relies on many different hardware switches and proprietary routers in different locations is difficult to manage. This arrangement can also slow down network traffic and reduce reliability and security while increasing cost. The traditional Internet client-server model with asymmetrical bandwidth needs to be overhauled. IP will continue to be the dominant protocol, but flow-based networks under active software management control and more intimately linked with the processing resources will better serve the high volume of “east-west” connections. This calls for a more open network, a “softer” network, where traffic management and routing are flow based and software controllable.

Optimal SoC approach for the future network

Today, traditional networking equipment and processing servers are, for the most part, developed separately. Over the past two years, wireless base stations have begun migrating from this paradigm to a model based on a system-on-chip (SoC). The industry is quickly approaching the point where specialized processing elements in the data center need to be closely aligned with the network processing and switching. This trend will dictate that networking equipment and servers adopt the SoC strategy already embraced by base stations. An SoC approach, optimized for different applications, provides a strong hardware foundation for network innovation. Instead of designing one size to fit all, the optimal SoC solution must select the right processor for the right task. For example, to improve efficiency and lower power consumption, an effective strategy would use low-power ARM® cores for general purpose and control processing, rather than power hungry general purpose server processors, and low-power digital signal processors (DSPs) for efficient video and image processing. SoCs that incorporate both ARM RISC cores and DSP cores are ideal for specialized server applications that supplement the general-purpose servers.

The integration of a high speed, high throughput, flexible and intelligent networking subsystem into the processing SoC takes scalable networking solutions to the next level. Specialized hardware accelerators can also be incorporated into the SoC to accelerate packet and network security processing, reducing latency and software complexity. Ethernet, with scalable throughput from 1 to 100Gbps, provides an ideal interconnect for both the external network and intra-server communications within the data center. As a standardized and widely adopted interconnect, Ethernet provides seamless interoperability with other network devices. Integrating Ethernet switches into the SoC reduces system cost and footprint. Leveraging the emerging software defined network can improve network performance and network reliability while enhancing security and manageability.

The OpenFlow standard is emerging as a key enabler of the software defined network. OpenFlow is based on the concept of separating the data and control planes. A similar separation strategy has been successfully deployed with telecom soft switches for control of media gateways (MGW) over the last decade. OpenFlow provides an open interface for Ethernet traffic switching and switch management, so that forwarding decisions can be centralized instead of being determined by individual routers as is currently done in today’s network. This enables network operators to optimize the network investment, reduce the network hardware cost and enable innovative new network applications and services based on flows.

The embedded networking in the SoCs will evolve to support OpenFlow, with external software controls and a control protocol that define data flows by applying dynamically configurable flow tables to direct the network traffic. Once OpenFlow enabled SoCs are in place, the ability to use software to control the network traffic routing will enable network managers to deploy new protocols and strengthen network security and resiliency. Additionally, network managers will be able to control the network topology to manage quality of service (QoS) without extensive hardware changes. Integrating Ethernet switching into the SoC eliminates the need for external switches and reduces hardware cost and complexity, resulting in increased system scalability. SoC based solutions combining processing and networking will ultimately deliver the highest performance and interconnect bandwidth per watt and provide the best way to scale both application processing and network capacity.
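The idea of a software-managed flow table can be sketched in a few lines. This is a simplified model in the spirit of OpenFlow, not the OpenFlow wire protocol or any controller API: the `FlowEntry` and `FlowTable` classes, the field names such as `ip_dst` and the action strings are hypothetical. Entries are installed by control software, packets are matched against them in priority order, and a packet that matches nothing is handed to the controller, which is assumed here as the table-miss behavior.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FlowEntry:
    priority: int            # higher value wins when several entries match
    match: Dict[str, object]  # header fields that must be equal; others are wildcards
    action: str              # what the data plane does with a matching packet


@dataclass
class FlowTable:
    entries: List[FlowEntry] = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        """Control plane operation: add an entry, keeping highest priority first."""
        self.entries.append(entry)
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: Dict[str, object]) -> str:
        """Data plane operation: return the action of the first matching entry."""
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                return entry.action
        return "send_to_controller"  # assumed behavior when no entry matches


# The controller installs two flows without touching the hardware.
table = FlowTable()
table.install(FlowEntry(10, {"ip_dst": "10.0.0.5"}, "output:2"))
table.install(FlowEntry(100, {"ip_dst": "10.0.0.5", "tcp_dst": 22}, "drop"))

if __name__ == "__main__":
    print(table.lookup({"ip_dst": "10.0.0.5", "tcp_dst": 80}))
    print(table.lookup({"ip_dst": "10.0.0.5", "tcp_dst": 22}))
    print(table.lookup({"ip_dst": "10.0.0.9", "tcp_dst": 80}))
```

The point of the sketch is the division of labor: `install` is the only place policy changes, so a new security rule or QoS treatment is a software update to the table rather than a hardware change, while `lookup` is the part that an SoC would implement in its packet processing hardware.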

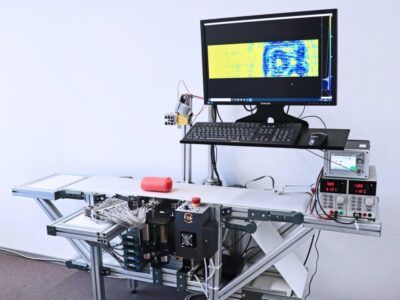

The following figure shows an example of scalable SoC hardware architecture that will unlock the potential of the future network.

Several emerging SoC solutions will enable networks to keep up with the explosive capacity demands now being placed on them. Powerful SoC based hardware will enable network flows, rather than routing protocol rules, to dynamically control connectivity, secure access and priority. Traffic shaping and policing will be coupled with high-performance packet processing and switching hardware. These network elements will be embedded with DSP and ARM RISC processing elements so that high-performance application processing is no more than a tick away from the network. Ironically, the evolution of SoC hardware will drive the soft network revolution.

About the Authors

Zhihong Lin is a strategic marketing manager for Texas Instruments’ multicore processors group, responsible for defining and planning key requirements for multicore SoCs. Lin joined TI in 2005 as a software design lead for the industry’s first integrated RF and baseband mobile DTV receiver. She has over 18 years of experience in the communications and networking industries and has authored multiple technical papers on multicore technology and applications. Lin holds an M.S. in electrical engineering from the University of Texas at Dallas.

Tom Flanagan is the director of technical strategy for Texas Instruments’ multicore processors group. He has also served as technical strategy director for TI’s digital signal processing systems and broadband communications businesses. In his current role, he identifies market trends and provides the vision and strategic direction for TI’s multicore group. Flanagan has an extensive background in the semiconductor industry and in voice and data networking, and holds several patents. Flanagan received his bachelor of science degree from James Madison University.

Pekka Varis, CTO for Texas Instruments’ multicore processors group, drives the company’s strategy and development efforts for packet-based multicore processing. Varis played a key role in developing TI’s Multicore Navigator, a unique system element of TI’s new KeyStone multicore architecture. He has over 14 years of experience in the communications infrastructure industry, including implementing platform software and architecting data plane blades for mobile networks. Varis holds an M.S. in electrical engineering from the Helsinki University of Technology and is a member of group technical staff at TI.
